PIM For Thoughts Planning

Moving from a PIM for memorization to a PIM for action.

The subtitle of this PIM currently is "Notes to a future self."

Notes are thus expected to be used later but in the same way that MemoryRecipe create recall in a timely manner, other notes in the PIM could prompt for action in a calendar.

See also

Scientifically oriented PIM

Setting up a personal epistemological lab.

Principle

The "power" of scientists is their method since their tools have being democratized through the Internet and open source software, why limit this power to specific field rather than generalize it?

One can literally use the tools a government was using 50 years ago to make decision to manage his house today why not do so?

Steps

Before making a large decision like voting (cf Seedea:Utopiahanalysis/Politicsnewoldtools ), buying a house (cf House ) or changing his career one should explicit his hypothesis.

), buying a house (cf House ) or changing his career one should explicit his hypothesis.

Once his hypothesis (cf MyBeliefs, Needs and Seedea:Content/Predictions ) in stated in his PIM, they can be tested, challenged, updated with statistics and other tools (cf Mathematics#Statistics or Seedea:Seedea/AImatrix

) in stated in his PIM, they can be tested, challenged, updated with statistics and other tools (cf Mathematics#Statistics or Seedea:Seedea/AImatrix Seedea:Seedea/Simulations

Seedea:Seedea/Simulations ) and Open Data websites like data.gov data.gov.uk dbpedia.org insee.fr and such (cf Seedea:Seedea/DATAmatrix

) and Open Data websites like data.gov data.gov.uk dbpedia.org insee.fr and such (cf Seedea:Seedea/DATAmatrix )

)

Eventually once the workflow and the tools are set up they could be used on a more daily basis and thus for more casual decisions.

See also

Inspired by

Discussion during the CollegeDeFranceColloqueDeRentree2010 regarding evidence-based and evidence-informed political decision making and the dissemination of knowledge and Irssi#LogsSocialBehaviors.

Addressing (as named address spaces)

- having a proper addressing and search is close to be the only things that matter

- how to properly design an address space? how to define URIs?

- what are the epistemological consequences of each design choice?

- references

Facilitate external contributions

Personalized views

- friends overlay that would directly point to content rather than description

- already used with user detection and localhost links

- explicitly handle shared content

- origin of the visitor

- (:if match "/benetou/{$ReturnLink}/":) c'est local! (:else:) ca vient d'ailleur ! (:ifend:) attempt in HomePage

- last events displayed in HomePage

Poka-yoke supported by new tools

(in particular programming frameworks and running environments)

- example of batch edits

- faster at usage by bad for history parsing

- see also

- NodeJS

- mainly discussion at 22:10 on freenode/#wiki on 03/07/2010

- ReadingNotes/LeanThinking

Perpetual re-invention

It is required, not simply desired.

- as my PIM get filled I tend to just add links to existing material instead of adding content

- e.g. when reading a book (like TenDayMBA) instead of writing down my view I simply link to a related page for this or that chapter

- http://www.ourp.im/Papers/LimitsOfNotReinventingTheWheel

- your PIM (wiki or not) is efficient for you not just because you like it but because you shaped it based on your own way of thinking

- when your way of thinking changes (thanks to it or thanks to other tools and events) you change it

- this seems to be very different from the above mentionned branded tools

- structural changes while maintaining historical coherence

- leveraging randomness

- combinatorial creativity

- ReadingNotes#RandomPageToImprove

- improvements

- update the Edition menu @pub/skins/glossyhue/edit.tmpl@ accordingly

- dedicated quick category adding option

- eventually with a specific style block

Optimization, safety and maintenance

With few edits a day at worst and as lags piles up and focus is lost, time and attention is wasted.

Optimization

of performance

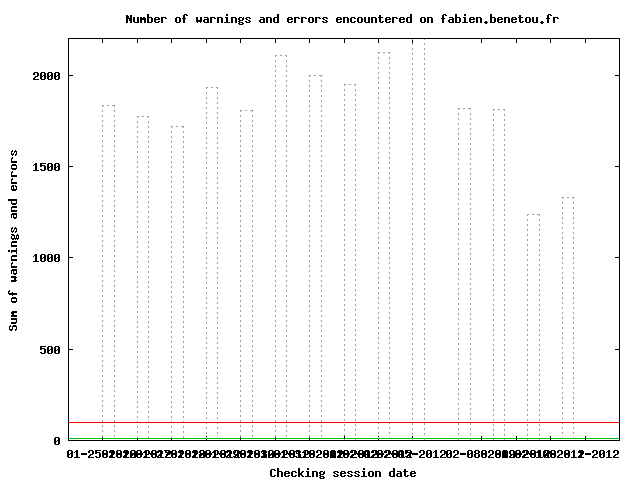

- measurement of performances/resources

- see Numbers and PIM/Testing

- use cron/wget query random pages

- measure time of query, log latency, ...

- cognition and physiological delays

- time to parse a page

- time to input a piece of information

- based on the device (mouse, keyboard, brain imaging, ...)

- Note that the speed comparison between Emotive/OpenEEG/whatever with keyboard binary for choices might not matter so much if the reading and the decision time is on another scale than the input (e.g. 5sec vs0.5sec or 0.1sec)

- Wikipedia:Muscle memory

- solutions

- deactivated

graphprocessing and gp_pageview_withingroup removed up to 3 seconds (!) according to time wget URL tests

- integrated frameworks

Safety

- remote automated backups (for failsafe and integrity)

- Crontab of

rdiff-backup

- fail safe recovery mechanism

- configuration files includes machine check (using

hostname) and update the path accordingly

- allows to run mirrors without having to edit the configuration file each time, eventually set specific behaviors

- de-activate more functions that should only restricted to server instance

- e.g.

measure_close.php error

- keeping personal data

Maintenance

- maintenance

- follow the principle of self-model as discovered in BeingNoOne and partly implemented via BackEnd

localmap.txt and more largely OwnWikisNetwork

- but lacking priority, e.g. is Path:/repository/ more important than the group ReadingNotes?

- note that this might be context dependent

- should also take into account MyCloudTransition

- especially important after migrating (e.g. from RPS to Kimsufi but more generally is one were to follow Seedea:Oimp/CloudArbitrage

)

)

- one can wonder if there is an equivalent between this and pain as a physiological information process

- especially important to maintain flexibility

- if one is easily able to assess that everything is fine then one is most likely to safely try new possibilities

- cf ClickingMoments and how after all checks the new form passes all the tests

- nightly pass

- first pass as of 18/03/2011

- result available at Path:/pub/linkchecking.txt

- took about ~10hrs (without using

time)

- seems it checked too many links (~1845) e.g. ?action=print or ?action=edit

- next pass should keep the log in order to be able to use

diff or stats with grep and wc (also to keep a motivating goal)

- error on skin through http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd

- this should be corrected for next pass

- a default CA should be used to avoid "Can't verify SSL peers without knowning which Certificate Authorities to trust" on https queries

- making it a habit will let me find patterns and it will become less and less of a pain

- e.g. FranceCulture new URLs since ?

- find a way to display progresses (positive feedback)

- for this pass all the pages edited as of 18/03/2011 8AM will be considered checked yet not necessarily fully corrected (some website are down, this should be handled without necessarily being removed)

- Keywords "fake" links cause a many errors yet are not supposed to be browsed in the first place

- corrected via

http://server.tld:port format

- Innovativ.IT (thus Seedea:. ) return "Forbidden by robots.txt", this should be corrected to at least allow this parsing

- modified robots.txt to allow for W3C-checklink user-agent

- Events because of its Template makes queries over RT services, in general resulting to errors, if nothing is relevant has been found few weeks after or if it has become unreachable, those links should be removed

- Paused at http://fabien.benetou.fr/Content/Exercises?action=print

- current setup

- linkchecker in Python

W3C Link Checker Check links and anchors in Web pages or full Web sites

- encapsulating in

~/bin/wiki_linkchecking

- used Lighttpd URLrewrite when changes are done (at least 301 redirection)

- second pass as of 22/04/2011

- result available at Path:/pub/checking/

- took about ~15hrs (without using

time)

- no stats nor dashboard done yet

- to do

- split in two analysis

- limited to internal links (within this domain)

- internal and external (including other domains)

- add the encapsulating script to crontab, cf Cron

- support versionning to allow diff between each pass (e.g. commit after every page have been scanned)

- example

hg commit -m "pass 1 (2011-Apr-21)"

- facilitate mass correction when servers stop

- e.g. Google Video hosted content removed

- visualization of total errors, each type, per page, ...

- define ?action=selfcheck and make it available

- provide a link to the result of the last check directly in the template

- add a periodic global check via Crontab

- add an event-based check via incron or hooked on PmWiki page saving

- provide an RSS feed for notifications per wiki instance

- well documented process

- just at the link layer (sort of), add PHP, JavaScript, etc... too

- e.g. Trails PHP error as of April 2011

- copying

- fingerprint by finding the N (low, starting with even just 1) less common word (based on dictionaries)

- search for that most unlikely combination on the web

W3C CheckLink stats generator

$PAGESTAT = pub/checklink/stats.txt

echo "" > $PAGESTAT

for each PAGE in $(ls --order-by-size)

echo -n "$PAGE : 404" $(grep 404problem $PAGE | wc -l);

echo -n "$PAGE : 500" $(grep 404problem $PAGE | wc -l);

...

>> $PAGESTAT

done;

Always keeping Privacy Settings in mind.

Leverage consuming information

Do not limit to just adding information but also use implicit information from usage.

- parse the access logs

/var/log/lighttpd/access.log

- include visualization

Integration with versionned files

Note that thanks to Cookbook:ImportText PmWiki can already act as a non specialized RCS. Provide a direct access either through local links (on the file system, httpd, etc) or through full links thanks to an httpd interface.

Completion

- JavaScript to load completion in the textarea of EditForm

- sources

- foreach X in $(ls wiki.d/)

- grep "^text=" wiki.d/$X | sed "s/\[\[#/\n#/g" | grep "^#" | grep -v "|" | sed "s/^\(#[^ ]\+\)\]\].*/\r\1/g" | sort | uniq

- dynamic useful values, like the current date, location, etc

- other wikis but with links through InterMap

- potential solutions

- consider also a "portable" version Greasemonkey to carry across other pages outside of the wikis

- local test

- somehow works (no error and completion) yet no display of the proposals

- both in the textarea and in the text field

- consequently it is probably a problem specific to the EditForm form

- tried with a fresh PmWiki install, same problem

- the EditForm currently does not keep the author

(:wick_start:)

(:input form onsubmit="return checkFormWick()":)

(:input textarea rows=27 cols=80 focus=1 class="wickEnabled":)

Completion: (:input text size=80:)\\

(:input end:)

(:wick_end:)

Search

- (currently) using Google to search across Seedea+here with Keywords#brain

- date of the last crawl can be acquired thanks to grep BotName /var/log/lighttpd/access.log | grep robots.txt | grep subdomain.domain.tld | sed "s/.*\[\(.*\)\].*/\1/"

- frequency?

- see also ApacheProjects#Nutch for potential replacement

- dedicated page displaying

- external search engine results

- internal wiki results

- last searches

- Redirect PmWiki:PageListTemplatesPageListTemplates

- (:template last:)(:if equal {$$PageCount} 1:)(:redirect {=$FullName}:)(:ifend:)

Since then moved to Sphinxsearch.

Quality tagging

Principle

qualify the quality of the page itself and instantly know where you have to work on and ordered by priority

Note

- WatchingNotes#ExternalClassification detailing while it could be done outside of each page

- Implicit categories might be used to, since you can look for the absence of a category (really?)

- Categories name should be thought carefully as they could be used to make better restriction as PageList works by pattern matching

- templates with categories can be made and included in order to provide coherent visuals instead of solely a link

Available categories

- quality

- ToCheck

- ToSpellCheck

- Quality1

- Quality2

- LackOfReferences

- PoorStyle

- structure

- ToExpand

- ToSummarize

- ToExplode

- ForThesis

- ForPaper

- MergeWithGeneralWiki

- modelization for Cookbook.Cognition#OptimizableModelPerPage

- HasModel

- HasData

- ReachedOptimum

See also

Fabien Benetou's PIM

Fabien Benetou's PIM